Summary:

In this project, the goal was to predict customer churn based on a pre-existing dataset. Customer churn refers to when customers stop using a service or product, and predicting this can help businesses take proactive actions to retain customers. Three machine learning algorithms were implemented and compared:

1- XGBoost (Extreme Gradient Boosting)

2- Random Forest

3- Logistic Regression

The dataset included various customer attributes such as demographic information, usage patterns, and service history, which were used as features to predict whether a customer would churn.

Technical Notes:

The ready-made dataset contained 7,043 rows of customer data. The models were prepared and evaluated as follows:

1- As an initial step, all categorical variables were converted into numerical values to make the dataset suitable for the algorithms. Additionally, certain columns were dropped to simplify the dataset.

2- In each iteration, 100 random rows were selected as the test dataset, while the remaining 6,943 rows were used as the training dataset.

3- This process was repeated 10 times, with a new set of 100 test rows selected each time, so that the result does not depend on a single split.

4- The accuracy for each algorithm is reported as the mean of these 10 runs, which is more robust than a single-run figure.
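The evaluation procedure in steps 2 to 4 can be sketched roughly as below. This is a minimal illustration using only the Python standard library, not the project's actual code: the function names, the `fit_predict` callback, the fixed seed, and the synthetic stand-in data are all assumptions made for the example. Any model (XGBoost, Random Forest, Logistic Regression) could be plugged in through `fit_predict`.

```python
import random
from statistics import mean


def repeated_holdout_accuracy(rows, labels, fit_predict,
                              n_runs=10, test_size=100, seed=0):
    """Mean accuracy over n_runs random train/test splits.

    fit_predict(train_X, train_y, test_X) is a hypothetical callback that
    trains a model and returns one predicted label per test row.
    """
    rng = random.Random(seed)
    indices = list(range(len(rows)))
    accuracies = []
    for _ in range(n_runs):
        # Draw a fresh set of test rows; everything else is training data.
        test_idx = set(rng.sample(indices, test_size))
        train_X = [rows[i] for i in indices if i not in test_idx]
        train_y = [labels[i] for i in indices if i not in test_idx]
        test_X = [rows[i] for i in sorted(test_idx)]
        test_y = [labels[i] for i in sorted(test_idx)]
        predictions = fit_predict(train_X, train_y, test_X)
        correct = sum(p == t for p, t in zip(predictions, test_y))
        accuracies.append(correct / test_size)
    return mean(accuracies), accuracies


def majority_class_baseline(train_X, train_y, test_X):
    """Placeholder model: always predicts the most common training label."""
    most_common = max(set(train_y), key=train_y.count)
    return [most_common] * len(test_X)


# Synthetic stand-in data (NOT the project's dataset): 7,043 rows,
# roughly a quarter of them labelled 1.
rows = [[i] for i in range(7043)]
labels = [1 if i % 4 == 0 else 0 for i in range(7043)]
mean_accuracy, per_run = repeated_holdout_accuracy(
    rows, labels, majority_class_baseline)
```

A majority-class baseline like the placeholder above is also a useful reference point: a real model is only informative to the extent that it beats it.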

Improvement Areas:

To improve the performance of the models in future iterations, several areas can be considered:

1- More Data and Features: Increasing the amount of data available for training can help the algorithms generalize better and improve accuracy. Including more customer features, such as additional demographic or behavioral data, can provide more context for prediction.

2- Categorical Grouping: Some columns divide customers into several groups (e.g., 5 different categories). Instead of dropping these columns, we can map numbers to these groups (e.g., 1 to 5) and use them as numerical inputs to the algorithms. This would allow us to preserve more information from the dataset and improve the models’ ability to learn patterns from diverse customer groups.
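The categorical grouping idea could look something like the sketch below. The column name `plan` and its values are hypothetical, chosen only for illustration. One caveat is worth keeping in mind: integer codes such as 1 to 5 imply an order between groups, which a linear model like Logistic Regression will treat as meaningful. When the groups have no natural order, one-hot encoding (one 0/1 column per group) is a common alternative, so both are shown.

```python
def ordinal_encode(values, order=None):
    """Map each category to an integer code starting at 1."""
    categories = order if order is not None else sorted(set(values))
    mapping = {category: code + 1 for code, category in enumerate(categories)}
    return [mapping[v] for v in values], mapping


def one_hot_encode(values):
    """Map each category to a 0/1 indicator vector (one slot per category)."""
    categories = sorted(set(values))
    encoded = [[1 if v == category else 0 for category in categories]
               for v in values]
    return encoded, categories


# Hypothetical column; not taken from the project's dataset.
plan = ["basic", "premium", "basic", "standard"]

codes, mapping = ordinal_encode(plan, order=["basic", "standard", "premium"])
# codes == [1, 3, 1, 2]

indicators, categories = one_hot_encode(plan)
# categories == ["basic", "premium", "standard"]
# indicators == [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
```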

Short Description Of Each Algorithm:

- XGBoost (Extreme Gradient Boosting):

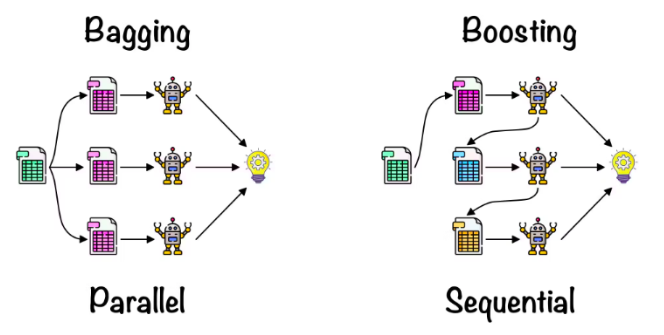

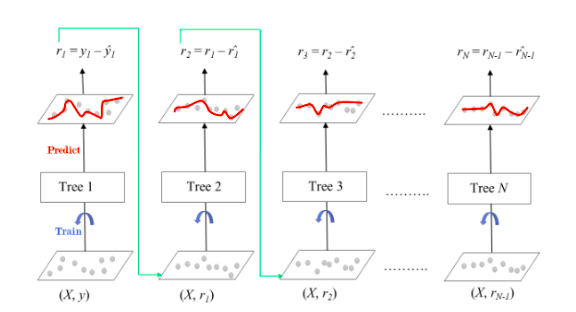

XGBoost is an efficient, scalable implementation of gradient boosting. It builds an ensemble of decision trees sequentially, where each new tree corrects the errors made by the previous ones. XGBoost uses regularization to prevent overfitting, prunes trees, and handles missing values natively.
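To make "each new tree corrects the errors of the previous ones" concrete, here is a minimal sketch of plain gradient boosting with squared-error loss, using one-split trees (stumps) on a single feature. It is not XGBoost itself: it leaves out regularization, pruning, and missing-value handling. The function names, the learning rate, and the toy data are assumptions for illustration.

```python
def fit_stump(xs, residuals):
    """Least-squares regression stump (a one-split tree) on a 1-D feature."""
    best = None
    # Excluding the largest value guarantees both sides of the split are non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_trees=20, learning_rate=0.3):
    """Fit stumps one after another, each on the current residuals."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    trees = []
    predictions = [base] * len(xs)
    for _ in range(n_trees):
        # Residuals are what the ensemble so far still gets wrong.
        residuals = [y - p for y, p in zip(ys, predictions)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        predictions = [p + learning_rate * tree(x)
                       for p, x in zip(predictions, xs)]

    def predict(x):
        return base + learning_rate * sum(tree(x) for tree in trees)

    return predict


# Toy data: the target switches from 0 to 1 between x = 3 and x = 4.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 0, 1, 1, 1]
predict = gradient_boost(xs, ys)
```

Each round shrinks the remaining error by a constant factor here, so `predict(1)` ends up close to 0 and `predict(6)` close to 1.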

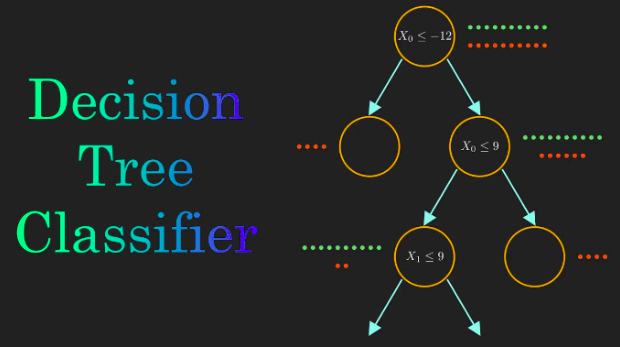

- Random Forest:

Random Forest is an ensemble method that builds multiple decision trees (often hundreds) during training. Each tree is trained on a random subset of data and features, and the final prediction is made by averaging the outputs (for regression) or majority voting (for classification). Compared with a single decision tree, this reduces overfitting and improves generalization.
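The two ingredients described above, random resampling and majority voting, can be sketched as follows. This is a simplified illustration, not a full Random Forest: each "tree" is a single-split stump, and one random feature is chosen per tree rather than per split. All names and the toy data are assumptions for the example.

```python
import random
from collections import Counter


def fit_stump_classifier(rows, labels, feature):
    """Pick the threshold on one feature that misclassifies the fewest rows."""
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda row: left_label if row[feature] <= threshold else right_label


def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0])
    trees = []
    for _ in range(n_trees):
        # Bootstrap sample: draw row indices with replacement.
        sample = [rng.randrange(len(rows)) for _ in rows]
        # Random feature for this tree (simplification of per-split sampling).
        feature = rng.randrange(n_features)
        trees.append(fit_stump_classifier([rows[i] for i in sample],
                                          [labels[i] for i in sample],
                                          feature))

    def predict(row):
        votes = Counter(tree(row) for tree in trees)
        return votes.most_common(1)[0][0]   # majority vote across trees

    return predict


# Toy data: both features separate the two classes.
rows = [[i, 100 - i] for i in range(20)]
labels = [0 if i < 10 else 1 for i in range(20)]
predict = fit_forest(rows, labels)
```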

- Logistic Regression:

Logistic Regression is a simple and commonly used linear model for binary classification problems. It models the probability of an outcome as a function of the input features using the logistic function. Unlike decision trees, logistic regression assumes a linear relationship between the features and the log odds of the target.
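As a small illustration of the "linear in the log odds" idea, the sketch below computes a churn probability from a weighted sum of features passed through the logistic function. The weights, bias, and feature values are made up for the example; in practice they would be learned from the training data.

```python
import math


def churn_probability(features, weights, bias):
    """Logistic model: a linear score (the log odds) mapped to a probability."""
    log_odds = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical weights and features, for illustration only.
weights = [1.0, 2.0]
neutral = churn_probability([0.0, 0.0], weights, bias=0.0)   # log odds 0
likely = churn_probability([1.0, 0.5], weights, bias=0.0)    # log odds 2

# Inverting the logistic function recovers the linear score.
recovered_log_odds = math.log(likely / (1.0 - likely))
```

A log odds of 0 corresponds to a probability of 0.5, and each unit increase in a feature shifts the log odds by that feature's weight.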

Conclusion:

Despite being the simplest model, Logistic Regression achieved the highest mean accuracy in this project. It is well suited to a binary target, but all three algorithms handle binary targets, so the result more likely reflects the simplicity of the dataset: a linear model was enough to capture most of the signal. XGBoost and Random Forest performed similarly to each other for the same reason. Although XGBoost is generally expected to outperform Random Forest, especially on more complex data, the straightforward nature of this data left the tree ensembles little extra structure to exploit. The gaps between the three models are small, at most 1.5 percentage points.

1- Accuracy of Logistic Regression: 80.8%

2- Accuracy of XGBoost: 79.9%

3- Accuracy of Random Forest: 79.3%
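To judge how much weight these differences can bear, a rough back-of-the-envelope check is the binomial standard error of an accuracy estimated from 10 runs of 100 test rows. This is an approximation under stated assumptions: it treats the 1,000 test predictions as independent, which is not exactly true because test sets from different runs can overlap. The function name is hypothetical.

```python
import math


def accuracy_standard_error(accuracy, n_test_predictions):
    """Binomial standard error of an accuracy estimate (independence assumed)."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_test_predictions)


# Mean accuracies as reported above.
reported = {
    "Logistic Regression": 0.808,
    "XGBoost": 0.799,
    "Random Forest": 0.793,
}
n_predictions = 10 * 100  # 10 runs x 100 test rows

standard_errors = {name: accuracy_standard_error(acc, n_predictions)
                   for name, acc in reported.items()}
for name, acc in reported.items():
    print(f"{name}: {acc:.1%} +/- {standard_errors[name]:.1%}")
```

Under these assumptions each figure carries an uncertainty of roughly 1.2 to 1.3 percentage points, which is about the same size as the gaps between the models, so the ranking should be read with caution.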

Decision Tree:

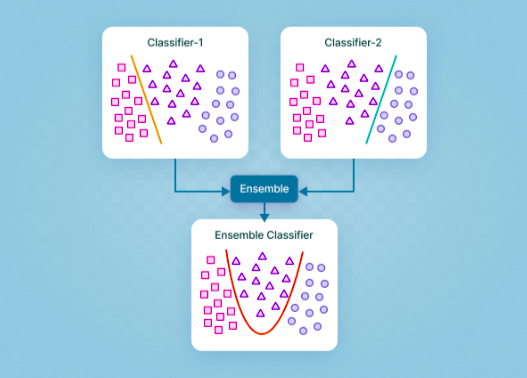

Ensemble Learning:

Bagging/Boosting:

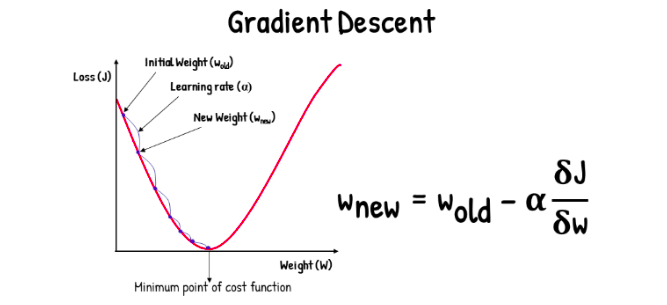

Gradient Descent:

GBoost:

In the competitive business world, predicting when a customer might leave—called churn—can help companies take action to keep them. This project focused on using machine learning to predict customer churn based on a dataset containing 7,043 customer records. The data included valuable insights like demographics, service usage, and past customer behavior. By accurately predicting churn, businesses can intervene and retain their customers, preventing losses.

The Approach: Three Machine Learning Algorithms

To achieve our goal, we compared the performance of three popular machine learning algorithms:

- XGBoost (Extreme Gradient Boosting)

- Random Forest

- Logistic Regression

Each algorithm brought its own strengths to the table, and we wanted to see which one performed best in predicting whether a customer would stay or leave.

Prepping the Data for Success

Before we could dive into modeling, we needed to prepare the dataset. Here’s how we tackled it:

- Categorical to Numerical Conversion: Since machine learning models work best with numerical data, we converted all the categorical variables (like gender, type of service) into numbers. For example, “Male” might become 0 and “Female” 1.

- Simplification: We also dropped certain columns that weren’t necessary for the prediction process, focusing on the most important features for simplicity and clarity.

- Testing and Training: In each run, we randomly selected 100 rows of data as our test set, leaving the rest for training the model. This process was repeated 10 times, each time with a different set of 100 test rows. The final accuracy was reported as the average of these 10 test runs, ensuring a fair and reliable evaluation.

The Algorithms Explained

XGBoost: How it works: XGBoost is like a precision tool that builds multiple decision trees one after another. Each tree corrects the mistakes of the previous one, creating a model that learns from its own errors.

Random Forest: How it works: Random Forest is a collection of many decision trees that work together. Each tree looks at a random subset of the data and features, and the final prediction is made by combining all the trees’ results.

Logistic Regression: How it works: Logistic Regression is simple but powerful for binary classification tasks like churn (yes or no). It models the probability of churn based on a linear relationship between input features (like age, service type) and the likelihood of leaving.

Evaluating the Results: Who Won the Churn Prediction Race?

After running the models, we found that Logistic Regression delivered the highest accuracy at 80.8%. This was followed closely by XGBoost at 79.9% and Random Forest at 79.3%. The results might seem surprising, since XGBoost is typically expected to outperform the other two, but in this case the straightforward nature of the data favored Logistic Regression. For a binary outcome like churn (yes or no) on data this simple, Logistic Regression was the most effective, simple, and reliable choice.

Areas for Improvement: Where to Go Next

Though the results were promising, there’s always room to improve. Here are a few ideas for future iterations:

- More Data and Features: Increasing the number of records in the dataset and adding more features, like additional behavioral or demographic attributes, could enhance the model’s ability to capture customer behavior patterns.

- Categorical Grouping: Some columns divided customers into categories (e.g., five different customer types). Instead of dropping these columns, we could map these categories to numerical values and retain them in the model, allowing it to learn from more varied customer groups.

- Enhanced Feature Engineering: We could explore more advanced techniques to extract hidden patterns from the existing data, improving the accuracy of the models.

Conclusion: What We Learned

Through this project, we learned that Logistic Regression was the most effective tool for predicting customer churn, thanks to its simplicity and the nature of the binary target variable. While XGBoost and Random Forest performed similarly, their strengths are often more apparent in more complex datasets. This project offers a solid foundation, but with more data and fine-tuning, we could further boost accuracy and uncover even deeper insights into customer behavior.