The goal is to develop an AI model that detects human emotions (Angry, Disgust, Fear, Happy, Sad, Surprise, and Neutral) from an input image. There are two approaches: train a CNN (convolutional neural network) from scratch using labeled images as training data, or use a pre-trained model such as DeepFace. This article covers both options and compares the results.

The first step in training a model is gathering suitable data. In this case we need a dataset of face images labeled with the emotion they express. I used msambare’s fer2013 dataset from Kaggle, which includes separate train and test sets with thousands of 48×48 images sorted into directories named after the emotions.

We create the model using TensorFlow’s Keras API and set up data generators that load and preprocess images from the specified directory, apply data augmentation (such as rotation and flipping), and split the data into training and validation sets. We then pass the properly formatted data to the model and start the training process. It’s worth noting that we set the input layer’s shape to 48×48 for several reasons:

- fer2013 provides its images at this size.

- While 48×48 may seem small, it’s generally sufficient to capture the key facial features necessary for emotion recognition.

- The small size forces the model to focus on the most important facial features for emotion recognition, rather than potentially irrelevant background details.
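The data pipeline described above can be sketched roughly as follows. This is a minimal sketch rather than the exact code behind the article: the helper name `make_generators`, the batch size, the augmentation strengths, and the 20% validation split are assumptions chosen for illustration, as is treating the images as grayscale. It relies on Keras's `ImageDataGenerator`, which newer TensorFlow releases mark as deprecated in favor of `tf.keras.utils.image_dataset_from_directory`.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

IMG_SIZE = (48, 48)  # matches the fer2013 image size


def make_generators(train_dir, batch_size=64, val_split=0.2):
    """Return (train_gen, val_gen) for a directory with one subfolder per emotion."""
    # Augmentation (rotation, flipping) is applied to the training subset only.
    train_datagen = ImageDataGenerator(
        rescale=1.0 / 255,        # scale pixel values to [0, 1]
        rotation_range=10,        # assumed value: rotate by up to 10 degrees
        horizontal_flip=True,
        validation_split=val_split,
    )
    # The validation subset is only rescaled, never augmented.
    val_datagen = ImageDataGenerator(rescale=1.0 / 255, validation_split=val_split)

    common = dict(
        directory=train_dir,
        target_size=IMG_SIZE,
        color_mode="grayscale",   # assumption: single-channel input
        class_mode="categorical", # one-hot labels derived from folder names
        batch_size=batch_size,
    )
    train_gen = train_datagen.flow_from_directory(subset="training", shuffle=True, **common)
    val_gen = val_datagen.flow_from_directory(subset="validation", shuffle=False, **common)
    return train_gen, val_gen
```

With a compiled Keras model, the generators could then be passed to `model.fit(train_gen, validation_data=val_gen, epochs=...)`. Both generators use the same `validation_split`, so they partition the same file list into disjoint subsets.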

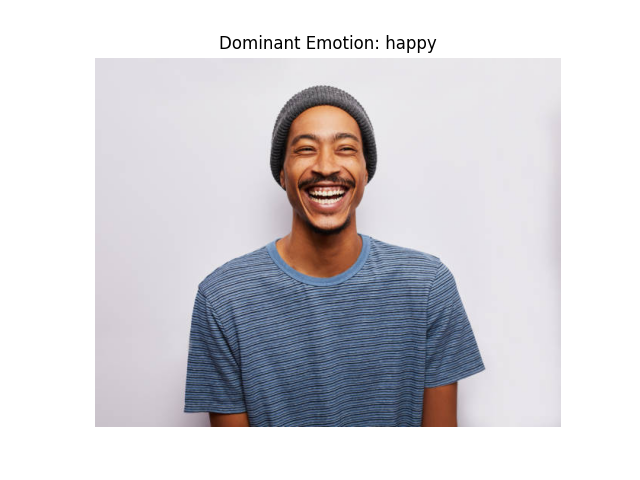

However, with modern hardware and deep learning techniques, it’s possible to work with larger image sizes if needed. Some researchers now use larger sizes (e.g., 96×96 or 224×224) for emotion recognition, especially when applying transfer learning from models pre-trained on larger datasets.

After training, we also plot the training and validation accuracy and loss over the epochs, and finally we pass an image path to the trained model to check the result (figure 1).

The second approach, using the DeepFace library, is much simpler: we just pass it the image and get back the emotion the model is most confident about. It actually returns an object with 4 keys:

- emotion: an object holding the certainty percentage for each emotion; the values sum to 100

- dominant_emotion: the emotion with the highest certainty percentage

- region: coordinates of the detected face

- face_confidence: the certainty of having found an actual face in the image

Result object for the example we used:

`{'emotion': {'angry': 6.749350723661236e-19, 'disgust': 5.121609371489773e-33, 'fear': 1.1359427430087947e-21, 'happy': 99.9953031539917, 'sad': 8.247558876836933e-17, 'surprise': 6.363264359876553e-08, 'neutral': 0.004698441262007691}, 'dominant_emotion': 'happy', 'region': {'x': 234, 'y': 67, 'w': 137, 'h': 137, 'left_eye': None, 'right_eye': None}, 'face_confidence': 0.91}`
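For reference, a call that produces a result like this might look as follows. This is a hedged sketch: the helper names `analyze_emotion` and `top_emotions` are made up for illustration, and the handling of the return value assumes that recent DeepFace versions return a list with one dictionary per detected face, while older versions returned a single dictionary.

```python
def analyze_emotion(img_path):
    """Run DeepFace emotion analysis and return the result for the first face."""
    # Imported lazily so the helper below works without DeepFace installed.
    from deepface import DeepFace

    result = DeepFace.analyze(img_path=img_path, actions=["emotion"])
    # Assumption: newer versions return a list (one dict per face),
    # older versions a single dict. Handle both.
    return result[0] if isinstance(result, list) else result


def top_emotions(result, k=3):
    """Return the k most likely (emotion, percentage) pairs, highest first."""
    ranked = sorted(result["emotion"].items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:k]
```

Applied to the result object above, `top_emotions(result, 2)` ranks `happy` first and `neutral` second, which matches the reported `dominant_emotion`.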

In our quest to create the ultimate emotion-reading AI, we’re following two exciting paths to success! Let’s dive into this fascinating journey of teaching machines to understand human feelings.

Our first adventure begins with building our own CNN detective from scratch. We start by gathering our training materials: the fer2013 dataset from Kaggle, a treasure trove of facial expressions shared by msambare. Picture thousands of 48×48 pixel photographs, each capturing one of seven emotional states: Angry, Disgust, Fear, Happy, Sad, Surprise, and Neutral.

In our high-tech training academy powered by TensorFlow’s Keras API, we construct our detective with sophisticated neural layers – think of it as building a digital brain! We start with convolutional layers to spot facial features, add pooling layers to focus on what’s important, and top it off with dense layers for making smart decisions. To keep our detective sharp, we use ReLU activation functions, with Softmax making the final call on emotions.
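A model along these lines could be sketched as below. The number of layers, the filter counts, the dropout rate, and the single-channel (grayscale) input are assumptions for illustration, not the article's actual architecture; only the layer types (convolution, pooling, dense, ReLU, Softmax) and the 48×48 input with seven output classes come from the text.

```python
from tensorflow.keras import layers, models


def build_emotion_cnn(input_shape=(48, 48, 1), num_classes=7):
    """Build a small CNN: conv/pool blocks followed by dense layers."""
    model = models.Sequential([
        layers.Input(shape=input_shape),
        # Convolution + pooling blocks extract increasingly abstract features.
        layers.Conv2D(32, (3, 3), activation="relu", padding="same"),
        layers.MaxPooling2D((2, 2)),   # 48x48 -> 24x24
        layers.Conv2D(64, (3, 3), activation="relu", padding="same"),
        layers.MaxPooling2D((2, 2)),   # 24x24 -> 12x12
        layers.Conv2D(128, (3, 3), activation="relu", padding="same"),
        layers.MaxPooling2D((2, 2)),   # 12x12 -> 6x6
        # Dense layers turn the feature maps into a class decision.
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.5),           # assumed rate; reduces overfitting
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",  # expects one-hot encoded labels
        metrics=["accuracy"],
    )
    return model
```

Calling `build_emotion_cnn().summary()` prints the layer shapes, which is a quick way to confirm that the output layer has one unit per emotion.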

Before training begins, we prepare our image evidence carefully. We normalize the pixels (like adjusting the brightness of a photograph), encode our emotion labels, and use clever data augmentation tricks. It’s like teaching our detective to recognize faces from different angles – we rotate images, flip them horizontally, zoom in and out, and even adjust the lighting!
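To make these steps concrete, here is a NumPy-only illustration of what normalization, label encoding, and a horizontal flip do to the data. It is a simplified sketch, not the preprocessing code used for the article; the function names and the alphabetical class order are assumptions.

```python
import numpy as np

# Assumed class order: alphabetical, as folder-based loaders typically sort it.
EMOTIONS = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]


def normalize(image_uint8):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return image_uint8.astype("float32") / 255.0


def one_hot(label, classes=EMOTIONS):
    """Encode an emotion name as a one-hot vector."""
    vec = np.zeros(len(classes), dtype="float32")
    vec[classes.index(label)] = 1.0
    return vec


def flip_horizontal(image):
    """Mirror an image (height x width) left to right."""
    return image[:, ::-1]
```

A flipped face still shows the same emotion, so the flipped copy keeps the original label; that is what makes flipping a safe augmentation here.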

To ensure our detective doesn’t become too rigid in their thinking, we add dropout layers – it’s like teaching them to think flexibly about each case. We track their progress using detailed performance metrics, including confusion matrices and classification reports, making sure they’re learning the right lessons.
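As an illustration of the evaluation step, the sketch below computes a confusion matrix and per-class recall with plain NumPy. It is a stand-in written for this explanation: in practice these reports are usually produced with scikit-learn's `confusion_matrix` and `classification_report`, and the article does not say which tool was used. The function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # Count each (true, predicted) pair, including repeated pairs.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_recall(cm):
    """Fraction of each true class that was predicted correctly."""
    totals = cm.sum(axis=1)
    # Classes with no samples get recall 0.0 instead of a division error.
    return np.divide(np.diag(cm), totals, out=np.zeros(len(cm)), where=totals > 0)
```

Here `y_true` and `y_pred` are integer class indices; for a Keras model, the predicted indices can be obtained by taking `argmax` over the Softmax output. Off-diagonal cells show which emotions the model confuses with each other.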

Meanwhile, on our second path, we have the DeepFace investigator – a seasoned professional with some impressive tricks up their sleeve. Not only can they spot emotions, but they can also guess age, detect gender, and handle multiple faces at once! They’re equipped with various investigation tools (VGG-Face, Facenet, OpenFace) and can even work in real-time, like a detective who never sleeps.

The results? A sophisticated emotion detection system that can read faces like an open book, whether you choose our custom-trained detective or the experienced DeepFace investigator. Each approach brings its own strengths to the table, giving us a powerful toolkit for understanding human emotions through the lens of artificial intelligence.

This is truly where science meets the art of reading human emotions, creating technology that brings us one step closer to machines that can understand how we feel!